Man-Made by Computer:

On-the-Fly Fine Texture 3D Printing

ACM Symposium on Computational Fabrication 2021

ᵃ Shandong University, ᵇ Ben-Gurion University of the Negev

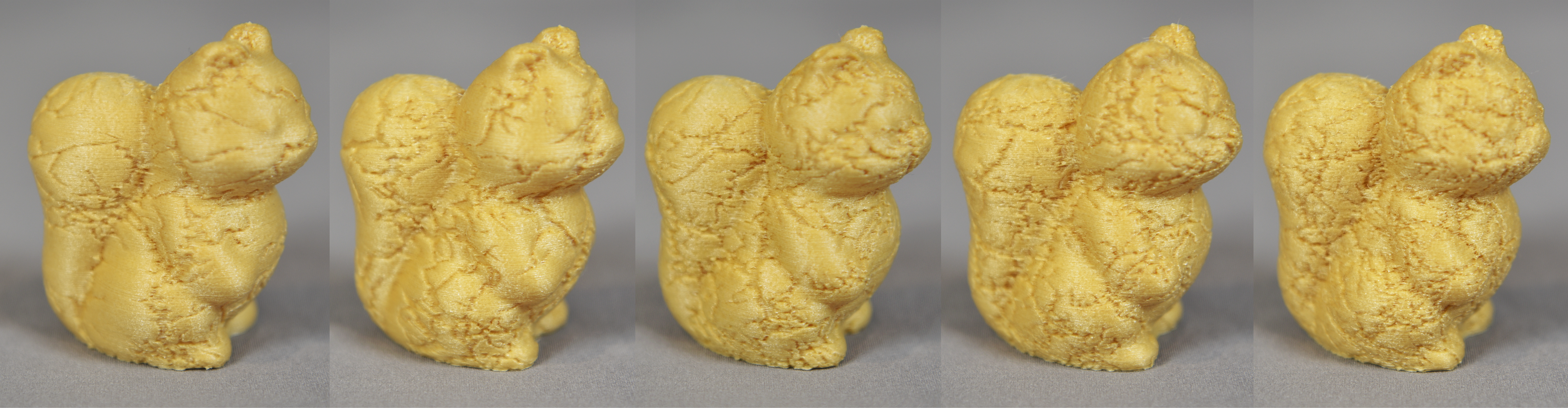

A 3D-printed squirrel textured by our G-code modifications, with crack variations that simulate the random cracks of a natural material.

Abstract

Applying textures to 3D models is a means of creating realistic-looking objects. This is especially important in the 3D manufacturing domain, as manufactured models should ideally have a natural and realistic appearance. Nevertheless, natural material textures usually consist of dense patterns and fine details. Embedding them onto 3D models is typically cumbersome, requiring long processing times and resulting in large meshes. This paper presents a novel approach for directly embedding fine-scale geometric textures onto 3D printed models by on-the-fly modification of the 3D printer’s head movement. Our idea is to embed 3D textures by revising the 3D printer’s G-code, i.e., incorporating texture details through modification of the printer’s path. Direct manipulation of the printer’s head movement allows for fine-scale texture mapping and editing on-the-fly during the 3D printing process. Thus, our method avoids computationally expensive texture mapping, mesh processing and manufacturing preprocessing. This allows embedding detailed geometric textures of unlimited density, which can model manual manufacturing artifacts and natural material properties. Results demonstrate that our direct G-code textured models are printed robustly and efficiently in both space and time compared to traditional methods.
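To make the idea of editing the printer's path concrete, the following is a minimal sketch, not the algorithm from the paper, which this page does not describe in detail. It assumes simple G-code where each `G1` move carries absolute `X` and `Y` values written as plain decimals, and it uses a sine wave as a stand-in texture. The function name `perturb_gcode` and its parameters `amplitude` and `wavelength` are hypothetical. The sketch displaces each move target sideways, along the normal of the incoming segment; it does not subdivide long segments or recompute the extrusion value `E`, both of which a real tool would likely need.

```python
import math
import re

# Matches X or Y followed by a plain decimal number (assumed input format).
COORD = re.compile(r"([XY])(-?\d+(?:\.\d+)?)")


def perturb_gcode(lines, amplitude=0.2, wavelength=2.0):
    """Shift each G1 XY target sideways by a sine of the travelled path length.

    Illustrative only: a sine wave stands in for a real texture function.
    """
    out = []
    prev = None       # previous ORIGINAL XY target
    travelled = 0.0   # cumulative original path length (mm)
    for line in lines:
        code = line.split(";", 1)[0]          # ignore trailing comments
        parts = code.split()
        coords = {axis: float(val) for axis, val in COORD.findall(code)}
        is_xy_move = bool(parts) and parts[0] == "G1" and "X" in coords and "Y" in coords
        if not is_xy_move:
            out.append(line)                  # pass other commands through
            continue
        x, y = coords["X"], coords["Y"]
        if prev is None:
            prev = (x, y)                     # first point has no direction yet
            out.append(line)
            continue
        dx, dy = x - prev[0], y - prev[1]
        seg = math.hypot(dx, dy)
        prev = (x, y)
        if seg == 0.0:
            out.append(line)                  # zero-length move: nothing to offset
            continue
        travelled += seg
        offset = amplitude * math.sin(2 * math.pi * travelled / wavelength)
        nx, ny = -dy / seg, dx / seg          # unit normal, left of travel direction
        new_x, new_y = x + offset * nx, y + offset * ny
        line = re.sub(r"X-?\d+(?:\.\d+)?", f"X{new_x:.3f}", line, count=1)
        line = re.sub(r"Y-?\d+(?:\.\d+)?", f"Y{new_y:.3f}", line, count=1)
        out.append(line)
    return out


if __name__ == "__main__":
    sample = ["G28", "G1 X0 Y0 E0", "G1 X0.5 Y0 E0.1", "G1 X1.0 Y0 E0.2"]
    for row in perturb_gcode(sample):
        print(row)
```

Because the sketch rewrites the toolpath text directly, no mesh is built or modified at any point, which is the property the abstract emphasizes.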

Video

More Results

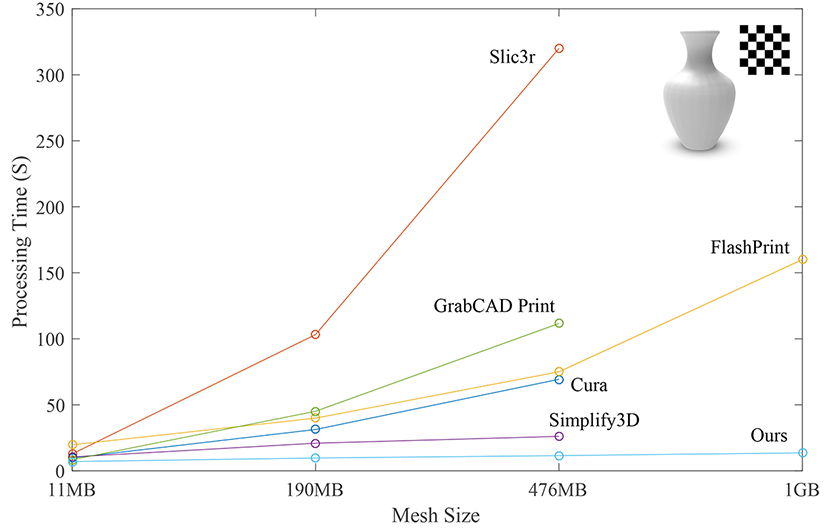

Performance comparison (processing time vs. mesh size) between five state-of-the-art 3D manufacturing tools and ours. Since our method has no mesh representation during texture embedding, the mesh size shown here is an approximation: we take the texture synthesized in our pipeline and perform texture baking in Maya® to obtain the corresponding mesh.

Downloads

| Paper (37.2MB) | Paper (Compacted Version 1.3MB) | Video (36.7MB) | G-code files (11.4MB) | Presentations (97.2MB) |

Acknowledgement

We thank all the anonymous reviewers for their valuable comments and constructive suggestions. This work is supported by grants from NSFC (61972232). Special thanks to Prof. Daniel Cohen-Or for inspiring discussions on this project.

BibTeX

@inproceedings{Yan2021,
  author = {Yan, Xin and Lu, Lin and Sharf, Andrei and Yu, Xing and Sun, Yulu},
  title = {Man-Made by Computer: On-the-Fly Fine Texture 3D Printing},
  year = {2021},
  isbn = {9781450390903},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3485114.3485119},
  doi = {10.1145/3485114.3485119},
  booktitle = {Symposium on Computational Fabrication},
  articleno = {6},
  numpages = {10},
  keywords = {geometric texture embedding, G-codes, meshless},
  location = {Virtual Event, USA},
  series = {SCF '21}
}